A few months ago, we embarked on a journey to better define our core values. With our team poised to grow significantly in the coming months following our Series B fundraising, we thought this would be a good time to reflect on the things that mean the most to us. Our values matter a lot to our team: they don’t just get posted on the walls; we live by them. Here are our values:

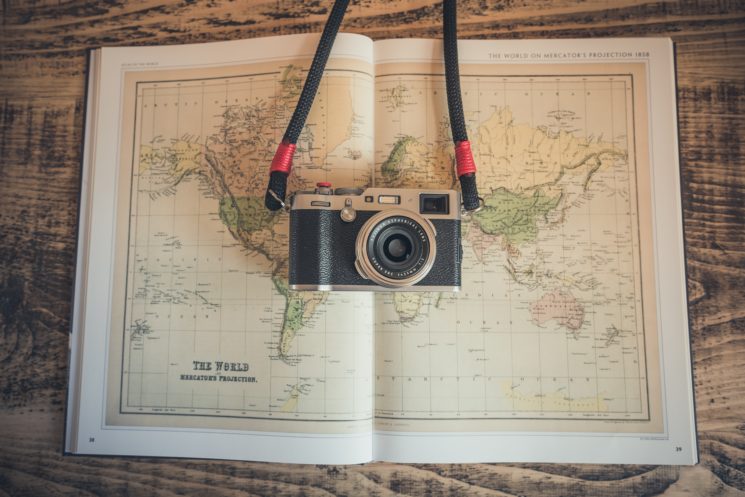

1. Going Places 📍

Our customers are going places; we are too.

Busbud aims to create a more accessible world for everyone. We move people. We help you visit family and friends, grow your horizons through tourism, engage in economic activities and seek educational opportunities. We help you go to the places where you need to be. We empower travellers with essential information for millions of bus routes around the world, improving access to affordable and sustainable transportation options.

At Busbud, our team is also set on an ambitious journey. Every day we are committed and motivated to help change travel and transportation by working on a product that is making a difference for travellers worldwide. We’re growing an international business, connecting daily with customers and partners around the world. We’re tackling a massive and challenging worldwide opportunity to make the bus more accessible. We’re also bringing our dynamism and leadership to help make the bus part of the travel industry and move mobility forward.

2. Independent Spirit 🎒

Learning and discovery are an integral part of the journey. New ideas and perspectives often live at the end of paths off the beaten track.

We believe that travel forges your life: it broadens horizons, builds confidence, develops empathy and creates memories for a lifetime. We believe in travelling to places that challenge you to grow as a person. We also believe that the most fun and interesting adventures often happen when you decide to veer off the beaten path. Busbud helps travellers create their own journey by connecting them to millions of destinations worldwide.

At Busbud, our team is full of independent-minded people who love to travel and who are continually looking for solutions to help travellers. We offer a high level of autonomy, so team members can find the best way to have the most impact, and a high level of flexibility in how they organize their work. We value initiative, ownership and trust. We encourage critical thinking and forging our own opinions; different perspectives are welcome. We hold ourselves accountable to back up our ideas with facts and data, and understand that innovation emerges as a result of this constructive dialogue. We cultivate our curiosity, teach ourselves and each other new things, and move along a path of continuous learning.

3. Sustainability 🌱

The bus is one of the most sustainable ways to travel; we also embrace sustainability in our business, workplace and community.

As travellers, we care about our planet’s health. Buses are one of the greenest forms of motorized transportation, with a much smaller environmental impact than other modes. By making it easier than ever to take the bus, Busbud is helping to shift travel to greener modes of collective transportation. We can extend Busbud’s impact and go beyond helping travellers: we can leave this world “a little better than we found it”, and that is very exciting. We’re proud to promote bus travel and we’ll continue to help make the bus part of the travel industry.

As a company, we are committed to finding sustainability in our activities. We promote actions that are sustainable for the environment. We are also efficient in how we work. We manage the growth of our business in a way that creates value for shareholders, bus partners, customers, team members and other community stakeholders. We strive to build a sustainable workplace, in which our team members can grow their careers. This means work-life balance, with a sustainable rhythm that continually drives the business forward while allowing our team members to have a life beyond work. It also means fostering diversity and inclusion to build a team with different perspectives, helping to keep our company and product vibrant and growing with new ideas.

4. Super Helpful 🙌

Busbud helps travellers have an amazing experience; our team members help each other succeed by collaborating.

Busbud was originally conceived as your travel buddy (i.e. the “bud” in Busbud), a sort of companion to your travel adventures. Helpfulness was always a core part of our mission. We aim to provide travellers with as much information as possible (i.e. all the best schedule options from the most popular operators, as well as the amenities, classes and ticket types available), in as many languages and with as many payment options as possible. We build a convenient experience that takes the hassle out of your booking process. Of course, unexpected events can happen while travelling. Our top-rated customer experience team is available to help you and will go the extra mile to sort out any issues and ensure you have a smooth journey. We also try to be helpful to our bus operator partners, earning their trust and working hand-in-hand to create a better experience for travellers every day. This starts with listening and empathy.

At Busbud, our team strongly believes in collaboration and collegiality. We’re creating a collaborative work environment where team members help each other out, and put the company and the mission first. We particularly value team members who mentor and help others, both at Busbud and in our community.

Aligning values with vision

As we started brainstorming to define our values, here are some of the attributes that we wanted them to have:

- Be specific and meaningful to Busbud, not generic to any company.

- Be a reflection of our identity and who we are, but also somewhat aspirational, allowing us to raise the bar over time.

- Resonate both internally with our team and externally with our customers, partners and other community stakeholders.

At the same time, we also wanted to align our values with our mission and vision. As you can read on our About page, our mission is affordable, accessible, sustainable travel. Our vision is an easy and seamless bus travel experience. I also recently published an in-depth blog post, The Next Leg of the Journey, laying out in more detail our vision for the coming years to build a more connected world. We think our new values go a long way to help us align with our mission.

We’re very happy to have values that will serve as a compass to guide us in the future and will help us communicate what we are about to future hires, travellers and partners. We look forward to bringing our values into action in the coming year.

Onwards,

LP